Meta Takes Down Russian Troll Farm: Disrupting Disinformation

In a significant move against online manipulation, Meta, the parent company of Facebook and Instagram, has dismantled a sprawling Russian troll farm that spread disinformation and sought to interfere in global affairs.

The operation, which had been active for years, used sophisticated tactics to sow discord and influence public opinion among audiences around the world.

The troll farm’s activities included creating fake accounts, spreading propaganda, and manipulating online conversations to advance a pro-Russian agenda. It targeted specific groups with tailored content, exploiting social media algorithms to amplify its reach and impact. Meta’s investigation revealed a complex network of accounts, groups, and pages designed to deceive users and shape public discourse.

The Troll Farm’s Operations

Meta’s takedown of the Russian troll farm exposed a sophisticated network of disinformation operations. These operations involved creating and spreading false information across various social media platforms, aiming to influence public opinion and sow discord.

Methods of Disinformation

The troll farm employed a range of methods to spread disinformation. These included:

- Creating fake accounts: The troll farm created numerous fake social media accounts to amplify their messages and make them appear more legitimate. These accounts often used stolen or generated profile pictures and names to deceive users.

- Manipulating hashtags: The troll farm used hashtags to promote their narratives and make them trend on social media platforms. This helped to spread their messages to a wider audience.

- Using bots: The troll farm used automated bots to post and share content, further amplifying their messages and creating the illusion of widespread support.

- Spreading misinformation: The troll farm created and spread false information about political events, social issues, and other topics. This misinformation was often designed to incite anger, fear, or distrust.
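To make the fake-account and hashtag tactics above more concrete, here is a minimal sketch of one heuristic a platform might use: flag a hashtag when most of its posts come from a handful of accounts. The input format (account, hashtag pairs), the function name `flag_concentrated_hashtags`, and the thresholds are hypothetical illustrations, not Meta’s actual detection method.

```python
from collections import Counter, defaultdict


def flag_concentrated_hashtags(posts, top_n=3, threshold=0.5, min_posts=10):
    """Flag hashtags whose volume comes mostly from a few accounts.

    posts: iterable of (account_id, hashtag) pairs (hypothetical input format).
    Returns {hashtag: share} for hashtags with at least `min_posts` posts
    where the `top_n` most active accounts produced more than `threshold`
    of all posts using that hashtag.
    """
    # Count posts per account, separately for each hashtag.
    per_tag = defaultdict(Counter)
    for account, tag in posts:
        per_tag[tag][account] += 1

    flagged = {}
    for tag, counts in per_tag.items():
        total = sum(counts.values())
        if total < min_posts:
            continue  # too little data to judge this hashtag
        top = sum(n for _, n in counts.most_common(top_n))
        share = top / total
        if share > threshold:
            flagged[tag] = share
    return flagged


if __name__ == "__main__":
    organic = [(f"user{i}", "#organic") for i in range(12)]
    pushed = [(f"sock{i % 3}", "#pushed") for i in range(30)]
    pushed += [("user1", "#pushed"), ("user2", "#pushed")]
    print(flag_concentrated_hashtags(organic + pushed))
```

Here a tag posted once each by 12 different accounts has a top-3 share of 0.25 and is not flagged, while a tag where three accounts produce 30 of its 32 posts scores 0.9375. Real detection would combine many such signals, since genuine grassroots campaigns can also be concentrated.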

Target Audiences and Goals

The troll farm targeted audiences in various countries, including the United States, Europe, and Latin America. The goals of their operations included:

- Influencing elections: The troll farm attempted to influence elections by spreading disinformation about candidates and parties. This was done by creating fake news stories, manipulating hashtags, and using bots to amplify their messages.

- Sowing discord: The troll farm aimed to sow discord among different groups by spreading misinformation and inflammatory content. This was done to create social divisions and undermine trust in institutions.

- Promoting Russian interests: The troll farm also aimed to promote Russian interests by spreading propaganda and positive narratives about the Russian government.

Comparison with Other Disinformation Campaigns

The troll farm’s operations were similar to those of other known disinformation campaigns, such as those conducted by the Internet Research Agency (IRA) and the Macedonian fake news factories. All of these campaigns used similar methods to spread disinformation, target specific audiences, and achieve their goals.

However, the troll farm’s operations were particularly sophisticated and well-funded, allowing them to reach a wider audience and have a greater impact.

Meta’s Response and Actions

Meta’s response to the discovery of the Russian troll farm was swift and decisive. The company took a multi-pronged approach, focusing on identifying the perpetrators, removing their accounts and content, and implementing measures to prevent similar incidents in the future.

Evidence and Justification

Meta relied on several lines of evidence to justify its actions, including:

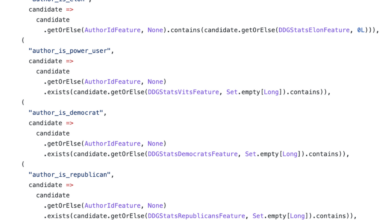

- Unusual patterns of activity: Meta’s algorithms detected a significant number of accounts showing unusual activity, such as the use of fake profiles, the spread of misinformation, and participation in coordinated campaigns.

- Linguistic analysis: Meta’s analysts used linguistic analysis techniques to identify common patterns in the content shared by the troll farm accounts, revealing a clear connection to Russian propaganda efforts.

- Network analysis: Meta’s network analysis tools helped to identify connections between accounts and expose the structure of the troll farm’s operation, revealing the coordination and manipulation tactics employed.
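The network analysis described above can be illustrated with a simple co-sharing graph: link two accounts when they post the same URL within a short time window more than once, then look for connected clusters. This is a minimal sketch under stated assumptions; the input format, the function name `coordinated_clusters`, the 60-second window, and the thresholds are all hypothetical, and it is not a description of Meta’s internal tools.

```python
from collections import Counter, defaultdict


def coordinated_clusters(shares, window=60, min_pair=2, min_size=3):
    """Find groups of accounts that repeatedly co-share the same URLs.

    shares: iterable of (account_id, url, timestamp_in_seconds) tuples
    (hypothetical format). Two accounts are linked if they posted the same
    URL within `window` seconds of each other at least `min_pair` times.
    Returns connected groups of at least `min_size` accounts.
    """
    parent = {}

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    # Bucket posts by URL so only accounts sharing the same link are compared.
    by_url = defaultdict(list)
    for account, url, ts in shares:
        parent.setdefault(account, account)
        by_url[url].append((ts, account))

    # Count how often each pair of accounts posted the same URL close together.
    pair_counts = Counter()
    for events in by_url.values():
        events.sort()
        for i, (ts_i, acc_i) in enumerate(events):
            for ts_j, acc_j in events[i + 1:]:
                if ts_j - ts_i > window:
                    break  # events are sorted, so later ones are even further apart
                if acc_i != acc_j:
                    pair_counts[frozenset((acc_i, acc_j))] += 1

    # Link pairs that co-shared often enough, then collect connected groups.
    for pair, count in pair_counts.items():
        if count >= min_pair:
            a, b = tuple(pair)
            union(a, b)

    groups = defaultdict(set)
    for account in parent:
        groups[find(account)].add(account)
    return [group for group in groups.values() if len(group) >= min_size]
```

Requiring repeated co-shares (`min_pair`) rather than a single coincidence is the key design choice: ordinary users often post the same popular link, but rarely in lockstep again and again.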

Impact on Troll Farm Operations

Meta’s actions had a significant impact on the troll farm’s operations.

- Account removal: Meta removed thousands of accounts associated with the troll farm, significantly reducing its reach and ability to spread disinformation.

- Content removal: Meta removed vast amounts of content generated by the troll farm, including fake news articles, propaganda, and inflammatory posts.

- Disruption of networks: Meta’s actions disrupted the networks the troll farm used to coordinate its activities, making it harder for them to operate effectively.

Impact on Disinformation Spread

Meta’s response has had a tangible impact on the spread of disinformation on its platforms.

- Reduced visibility: The removal of accounts and content has significantly reduced the visibility of the troll farm’s propaganda, limiting its reach and influence.

- Increased awareness: Meta’s actions have raised awareness about the threat of disinformation and encouraged users to be more critical of the information they encounter online.

- Enhanced detection capabilities: Meta has continued to invest in improving its detection capabilities, making it harder for future troll farms to operate undetected.

Impact and Implications

The takedown of the Russian troll farm by Meta has far-reaching implications, highlighting the ongoing battle against disinformation and the potential for online manipulation to influence public opinion and political discourse. This event serves as a stark reminder of the vulnerabilities inherent in online platforms and the need for proactive measures to combat the spread of misinformation.

Impact on Public Opinion and Political Discourse

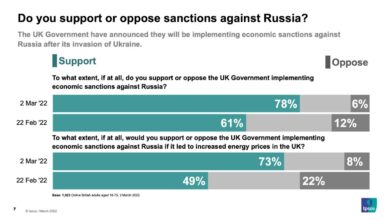

The activities of troll farms can have a significant impact on public opinion and political discourse. By spreading propaganda, engaging in coordinated campaigns, and manipulating online conversations, these groups can sow discord, undermine trust in institutions, and influence the outcome of elections.

The Russian troll farm targeted in this case used various tactics to achieve its objectives, including:

- Creating fake accounts to amplify their messages and make them appear more popular and legitimate.

- Spreading divisive content to polarize public opinion and sow discord among different groups.

- Promoting conspiracy theories and misinformation to undermine trust in established institutions and narratives.

- Coordinating attacks on individuals and organizations perceived as threats to their agenda.

These activities can have a significant impact on public opinion by shaping perceptions, influencing voting behavior, and eroding trust in democratic institutions. For example, during the 2016 US presidential election, Russian troll farms were found to have interfered with the campaign by spreading disinformation and propaganda aimed at influencing voters.

The impact of these activities is not limited to elections; they can also contribute to the polarization of society and undermine social cohesion.

The Role of Social Media Platforms

Social media platforms have become integral to modern life, serving as avenues for communication, information sharing, and entertainment. However, their ubiquitous nature has also made them susceptible to manipulation, particularly in the form of disinformation campaigns. Understanding the role of social media platforms in the spread of disinformation is crucial for mitigating its harmful effects.

The Role of Social Media Platforms in the Spread of Disinformation

Social media platforms provide fertile ground for disinformation because of their vast reach, high user engagement, and algorithmic features. The ease of creating and sharing content, coupled with algorithms designed to maximize engagement, can amplify misleading content regardless of whether it is accurate.

- Viral Spread: Disinformation can spread rapidly on social media because each share exposes the content to a new set of followers, who can share it in turn. This can create a snowball effect, reaching a large audience in a short time.

- Echo Chambers: Social media algorithms can create echo chambers, where users are primarily exposed to information that aligns with their existing beliefs. This can reinforce biases and make individuals more susceptible to accepting false information.

- Bot Networks: Automated accounts, known as bots, can be used to amplify disinformation by spreading content, manipulating trends, and creating the illusion of widespread support for false narratives.
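A toy branching model shows why the snowball effect matters. Assume each share triggers, on average, R further shares in the next round; the expected total after G rounds is then seed × (1 + R + R^2 + ... + R^G). The function name `expected_reach` and its parameters are hypothetical, and real diffusion on social networks is far noisier than this simple sketch.

```python
def expected_reach(seed_posts, reshares_per_share, rounds):
    """Expected total shares in a toy branching model of viral spread.

    seed_posts: initial posts (e.g. from a network of fake accounts or bots).
    reshares_per_share: average number of new shares each share triggers (R).
    rounds: how many rounds of resharing follow the seed posts.
    """
    total = 0.0
    current = float(seed_posts)
    for _ in range(rounds + 1):  # round 0 is the seed posts themselves
        total += current
        current *= reshares_per_share
    return total


if __name__ == "__main__":
    print(expected_reach(10, 2, 5))    # growing cascade (R > 1)
    print(expected_reach(10, 0.5, 5))  # fizzling cascade (R < 1)
```

With 10 seed posts and R = 2, five rounds of resharing yield an expected 630 shares; with R = 0.5, the same seeds reach fewer than 20. In this model, bot networks effectively raise the seed count, while engagement-maximizing algorithms can push R upward.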

Responsibilities of Social Media Companies in Combating Online Manipulation

Social media companies bear significant responsibility in combating online manipulation, given their role in facilitating the spread of disinformation. They can take various steps to mitigate the risks associated with their platforms.

- Content Moderation: Social media platforms need robust content moderation policies to identify and remove disinformation, hate speech, and other harmful content. This can involve using automated tools and human reviewers to assess the veracity of information.

- Transparency and Accountability: Platforms should be transparent about their algorithms and how they impact content visibility. This transparency can help users understand the factors influencing the information they encounter and make informed decisions.

- User Education: Social media companies can play a role in educating users about disinformation and how to identify it. Providing resources and tools to help users evaluate information critically can empower them to make informed choices.

Comparison of Approaches to Addressing Disinformation

Different social media platforms have adopted varying approaches to address disinformation. Some platforms have focused on proactive content moderation, while others have prioritized user education and transparency.